Modelling Sequence Data

Some tasks where the input is sequential in nature:

- speech recognition

- machine translation

- language modeling

- sentiment analysis

- video data

How should we choose our model? Again, FCNs are universal approximators, but the size of the model can grow too much.

If our sequence is long, the FCN would become prohibitively large. Idea: input one element at a time and re-use the same weights! With this idea we don't care about the sequence length $T$.

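To remember previous information, we employ a recurrent mechanism: a hidden state $h_t$ is updated from the previous state $h_{t-1}$ and the current input $x_t$, using the same function $f_W$ at every step.

$$h_t = f_W(h_{t-1}, x_t)$$

We can unfold this formula $T$ times, once per element of the sequence.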

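A simple RNN model, with activation function $\phi$, recurrent weights $W$, input weights $U$ and bias $b$:

$$h_t = \phi(W h_{t-1} + U x_t + b)$$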
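To make the weight re-use concrete, here is a minimal NumPy sketch of a simple RNN forward pass. The names `rnn_forward`, `W`, `U`, `b`, the choice of `tanh` as activation, and the toy dimensions are assumptions made for illustration, not notation taken from these notes.

```python
import numpy as np

def rnn_forward(xs, W, U, b, h0=None):
    """Run a simple RNN over a sequence, reusing the same weights at every step.

    xs: array of shape (T, input_dim)
    W:  recurrent weights, shape (hidden_dim, hidden_dim)
    U:  input weights, shape (hidden_dim, input_dim)
    b:  bias, shape (hidden_dim,)
    Returns the hidden states as an array of shape (T, hidden_dim).
    """
    hidden_dim = W.shape[0]
    h = np.zeros(hidden_dim) if h0 is None else h0
    hs = []
    for x in xs:                        # feed one input at a time
        h = np.tanh(W @ h + U @ x + b)  # same W, U, b at every step (tanh is an assumed choice)
        hs.append(h)
    return np.array(hs)

rng = np.random.default_rng(0)
W = rng.normal(scale=0.5, size=(4, 4))
U = rng.normal(scale=0.5, size=(4, 3))
b = np.zeros(4)

# The same parameters handle sequences of different lengths.
short = rnn_forward(rng.normal(size=(5, 3)), W, U, b)
long = rnn_forward(rng.normal(size=(50, 3)), W, U, b)
print(short.shape, long.shape)
```

The number of parameters depends only on the input and hidden dimensions, never on the sequence length.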

Training an RNN

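It's like we are backpropagating through time. Just unfold the network! The loss is just the sum of the losses at each time step:

$$L = \sum_{t=1}^{T} L_t$$

For simplicity, let's assume that there is no bias and that the activation function is the identity. Then, with recurrent weights $W$ and input weights $U$,

$$h_t = W h_{t-1} + U x_t$$

Let $k < t$, then by the chain rule

$$\frac{\partial L_t}{\partial h_k} = \frac{\partial L_t}{\partial h_t} \prod_{i=k+1}^{t} \frac{\partial h_i}{\partial h_{i-1}}$$

but in our simple case

$$\frac{\partial h_i}{\partial h_{i-1}} = W$$

so that

$$\frac{\partial L_t}{\partial h_k} = \frac{\partial L_t}{\partial h_t} W^{t-k}$$

Things could get ugly if $t - k$ is big: if the largest eigenvalue modulus of $W$ is greater than $1$ we get exploding gradients, if it's less than $1$ we get vanishing gradients.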

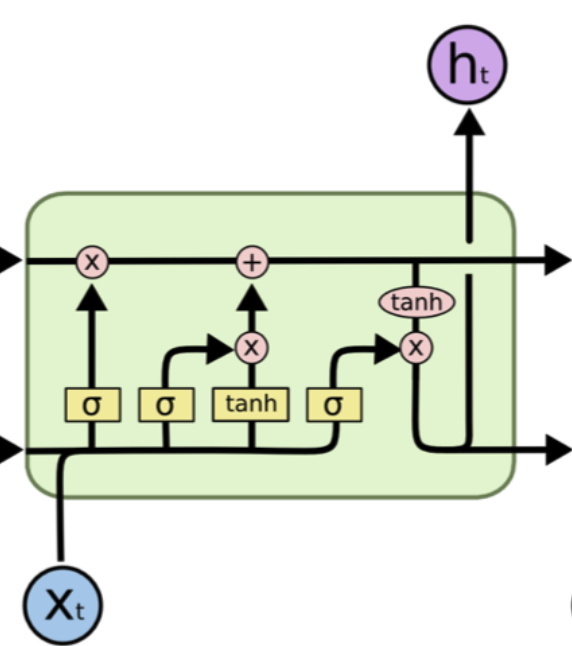
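The effect of repeated multiplication by the recurrent weight matrix can be checked numerically. The sketch below is only an illustration under stated assumptions: the helper names `backprop_factor_norms` and `rescale_spectral_radius` are hypothetical, and the matrix is made symmetric so that the norm of its $k$-th power equals its largest eigenvalue modulus raised to the power $k$.

```python
import numpy as np

def backprop_factor_norms(W, max_steps):
    """Spectral norm of W^k for k = 1..max_steps (identity activation, no bias)."""
    norms = []
    P = np.eye(W.shape[0])
    for _ in range(max_steps):
        P = P @ W                          # one more step back in time
        norms.append(np.linalg.norm(P, 2))
    return np.array(norms)

def rescale_spectral_radius(W, target):
    """Rescale W so that its largest eigenvalue modulus equals `target`."""
    radius = np.max(np.abs(np.linalg.eigvals(W)))
    return W * (target / radius)

rng = np.random.default_rng(0)
A = rng.normal(size=(8, 8))
W = (A + A.T) / 2                          # symmetric, an assumption made for simplicity

shrinking = backprop_factor_norms(rescale_spectral_radius(W, 0.9), 100)
growing = backprop_factor_norms(rescale_spectral_radius(W, 1.1), 100)

print(f"radius 0.9: ||W^100|| = {shrinking[-1]:.2e}")  # vanishing
print(f"radius 1.1: ||W^100|| = {growing[-1]:.2e}")    # exploding
```

A radius only slightly away from 1 is enough: over 100 steps the factor changes by several orders of magnitude in either direction.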

LSTM

Long Short-Term Memory

In a simple RNN, past inputs contribute less and less to the gradient of the loss. We need to add memory!

Main ideas

- a new hidden state called cell state, with the ability to store long-term information.

- LSTM can read, write and erase information from a cell.

- Gates are defined to select which information is read, written or erased; they are also vectors with the same length as the cell state.

- At each time step, each entry of a gate can be open (1), closed (0), or somewhere in between.

Forget Gate

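Controls what is kept vs forgotten from the previous cell state.

$$f_t = \sigma(W_f h_{t-1} + U_f x_t + b_f)$$

Input Gate

Controls what parts of the new cell content are written to the cell.

$$i_t = \sigma(W_i h_{t-1} + U_i x_t + b_i)$$

Output Gate

Controls what parts of the cell state are transferred to the hidden state.

$$o_t = \sigma(W_o h_{t-1} + U_o x_t + b_o)$$

Here $\sigma$ is the sigmoid function, applied elementwise, so every gate entry lies between 0 and 1. Each gate has its own weights $W$, $U$ and bias $b$.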

New Cell content

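The new content $\tilde{c}_t$ that could be written to the cell is computed from the previous hidden state and the current input:

$$\tilde{c}_t = \tanh(W_c h_{t-1} + U_c x_t + b_c)$$

We update the content of the cell and the hidden state using the forget, input and output gates $f_t$, $i_t$, $o_t$, where $\odot$ is the elementwise product.

Forget and write:

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

The new hidden state uses the output gate:

$$h_t = o_t \odot \tanh(c_t)$$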
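The gate mechanics described above can be sketched as a single LSTM time step in NumPy. This assumes the common formulation in which each gate is a sigmoid of an affine function of the previous hidden state and the current input. The names `lstm_step`, `init_params` and the `params` layout are hypothetical, chosen only for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step. `params` maps each of 'f', 'i', 'o', 'c' to (W, U, b)."""
    def affine(name):
        W, U, b = params[name]
        return W @ h_prev + U @ x + b

    f = sigmoid(affine("f"))        # forget gate
    i = sigmoid(affine("i"))        # input gate
    o = sigmoid(affine("o"))        # output gate
    c_tilde = np.tanh(affine("c"))  # new cell content
    c = f * c_prev + i * c_tilde    # forget some old content, write some new
    h = o * np.tanh(c)              # read part of the cell into the hidden state
    return h, c

def init_params(input_dim, hidden_dim, rng):
    """Hypothetical helper: small random weights and zero biases."""
    return {
        name: (
            rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)),
            rng.normal(scale=0.1, size=(hidden_dim, input_dim)),
            np.zeros(hidden_dim),
        )
        for name in ("f", "i", "o", "c")
    }

rng = np.random.default_rng(0)
params = init_params(input_dim=3, hidden_dim=4, rng=rng)
h, c = np.zeros(4), np.zeros(4)
for x in rng.normal(size=(6, 3)):   # a toy sequence of 6 inputs
    h, c = lstm_step(x, h, c, params)
print(h.shape, c.shape)
```

When the forget gate is close to 1 and the input gate close to 0, the cell state is copied almost unchanged from one step to the next, which is how long-term information can be preserved.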

GRU

Gated Recurrent Units

It doesn't use a cell state, but it has gates!

Reset Gate

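Controls what parts of the previous hidden state are used to compute new content.

$$r_t = \sigma(W_r h_{t-1} + U_r x_t + b_r)$$

Update Gate

Controls what parts of the hidden state are updated vs preserved.

$$u_t = \sigma(W_u h_{t-1} + U_u x_t + b_u)$$

We use the gates very similarly to create a new hidden state: the reset gate selects which parts of $h_{t-1}$ enter the new content $\tilde{h}_t$, and the update gate blends that content with the previous state, where $\sigma$ is the sigmoid function and $\odot$ the elementwise product:

$$\tilde{h}_t = \tanh(W_h (r_t \odot h_{t-1}) + U_h x_t + b_h)$$

$$h_t = (1 - u_t) \odot h_{t-1} + u_t \odot \tilde{h}_t$$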
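A single GRU time step can be sketched in NumPy in the same spirit. This assumes the convention in which an update gate close to 1 means "take the new content" (some references swap the roles of the two terms in the final blend). The names `gru_step` and the `params` layout are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, params):
    """One GRU time step. `params` maps each of 'u', 'r', 'h' to (W, U, b)."""
    W_u, U_u, b_u = params["u"]
    W_r, U_r, b_r = params["r"]
    W_h, U_h, b_h = params["h"]

    u = sigmoid(W_u @ h_prev + U_u @ x + b_u)              # update gate
    r = sigmoid(W_r @ h_prev + U_r @ x + b_r)              # reset gate
    h_tilde = np.tanh(W_h @ (r * h_prev) + U_h @ x + b_h)  # new content
    return (1.0 - u) * h_prev + u * h_tilde                # blend old state and new content

rng = np.random.default_rng(0)
hidden_dim, input_dim = 4, 3
params = {
    name: (
        rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)),
        rng.normal(scale=0.1, size=(hidden_dim, input_dim)),
        np.zeros(hidden_dim),
    )
    for name in ("u", "r", "h")
}

h = np.zeros(hidden_dim)
for x in rng.normal(size=(6, input_dim)):  # a toy sequence of 6 inputs
    h = gru_step(x, h, params)
print(h.shape)
```

With the update gate near 0 the previous hidden state passes through unchanged, which plays the role that the cell state plays in an LSTM.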