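In self-supervised learning, the self-supervised task (also known as a pretext or proxy task) generates labels from the data itself, so that we can train with an ordinary supervised loss function. Examples of pretext tasks: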

- Rotation: rotate the image (e.g. by 0°, 90°, 180° or 270°) and train a model to classify which rotation has been applied. The idea is that, to solve this, the model needs to learn a deep representation of the object.

- Relative position: choose a patch and give the model one of its neighbouring patches. The model must classify where the neighbour was located relative to the first patch (e.g. one of the 8 positions around it).

- Jigsaw puzzle: harder than the previous task. Shuffle the patches of an image and let the model find the permutation that was applied (classification over a fixed set of permutations).

- Colorization: predict the color channels of an image from its grayscale version.
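Here is a minimal sketch of how the labels for two of these tasks come for free from unlabeled data. It assumes an image is a plain 2-D list of pixel values and that the jigsaw patches are already cut out. The function names and the fixed list of allowed permutations are hypothetical choices for illustration, not the code of a specific paper.

```python
import random


def rot90(img):
    """Rotate a 2-D image (list of rows) by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def rotation_example(img, rng=random):
    """Build one (input, label) pair for the rotation pretext task.

    The label k in {0, 1, 2, 3} means the image was rotated by k * 90 degrees.
    A classifier is then trained to predict k from the rotated image.
    """
    k = rng.randrange(4)
    rotated = img
    for _ in range(k):
        rotated = rot90(rotated)
    return rotated, k


def jigsaw_example(patches, permutations, rng=random):
    """Build one (input, label) pair for the jigsaw pretext task.

    `permutations` is a fixed (hypothetical) list of index orderings.
    The label is the index of the permutation used to shuffle the patches.
    """
    label = rng.randrange(len(permutations))
    shuffled = [patches[i] for i in permutations[label]]
    return shuffled, label
```

A classifier trained on these pairs receives `rotated` (or `shuffled`) as input and `k` (or `label`) as the target. No human annotation is involved.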

Solving the pretext task is not the goal in itself. Self-supervision produces features that transfer well to downstream tasks.

The same idea is used in language modelling, where part of the input (e.g. the next word or a masked word) is predicted as the target.
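To make "part of the input as a target" concrete, here is a sketch of two common ways to build (input, target) pairs from a token sequence: next-token prediction and masked-token prediction. The `[MASK]` placeholder and the function names are illustrative assumptions.

```python
def next_token_pairs(tokens):
    """Next-token targets: the prefix tokens[:t+1] is the input, tokens[t+1] the target."""
    return [(tokens[: t + 1], tokens[t + 1]) for t in range(len(tokens) - 1)]


def masked_example(tokens, positions, mask="[MASK]"):
    """Masked targets: hide the tokens at `positions` and keep them as labels."""
    inputs = [mask if i in positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in positions}
    return inputs, targets
```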

Contrastive learning

Learn representations such that similar samples are close to each other and dissimilar ones are far apart.

It is used in vision and language tasks, for example face authentication.

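This is achieved with a suitable loss function. For a pair of inputs $x_i, x_j$ with labels $y_i, y_j$ and an encoder $f_\theta$:

$$\mathcal{L}_\text{cont}(x_i, x_j) = \mathbb{1}[y_i = y_j]\,\lVert f_\theta(x_i) - f_\theta(x_j)\rVert_2^2 + \mathbb{1}[y_i \neq y_j]\,\max\big(0,\ \epsilon - \lVert f_\theta(x_i) - f_\theta(x_j)\rVert_2\big)^2$$

If the labels are equal, we minimize the squared distance. If they are different, we want to maximize the distance. Maximizing it without limit would leave the loss unbounded from below, so we introduce the $\max$: we are fine once the distance exceeds $\epsilon$, a positive "tolerance" (margin) parameter, and the pair then contributes nothing.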
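Below is a sketch of the pairwise loss for a single pair. It assumes the embeddings have already been computed by some encoder and are plain lists of floats. `eps` plays the role of the positive tolerance (margin) parameter, and its default of 1.0 is an arbitrary choice.

```python
import math


def euclidean(u, v):
    """L2 distance between two embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def contrastive_loss(f_i, f_j, same_label, eps=1.0):
    """Pairwise contrastive loss on embeddings f_i = f(x_i) and f_j = f(x_j).

    Similar pairs pay the squared distance. Dissimilar pairs pay only
    while they are closer than the margin eps.
    """
    d = euclidean(f_i, f_j)
    if same_label:
        return d ** 2
    return max(0.0, eps - d) ** 2
```

In training this value would be averaged over the pairs in a batch and backpropagated through the encoder. The plain-Python version only shows the arithmetic.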

Triplet Loss

Introduced in 2015 in FaceNet, where the task was recognizing the same person across different poses and angles.

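Given an anchor input $x$, we select a positive sample $x^+$ (same identity) and a negative sample $x^-$ (different identity).

Our objective is to minimize the triplet loss:

$$\mathcal{L}_\text{triplet}(x, x^+, x^-) = \max\big(0,\ \lVert f_\theta(x) - f_\theta(x^+)\rVert_2^2 - \lVert f_\theta(x) - f_\theta(x^-)\rVert_2^2 + \epsilon\big)$$

This pushes the anchor closer to the positive than to the negative by at least the margin $\epsilon$.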
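Here is a sketch of the triplet loss for one (anchor, positive, negative) triple. As before, it assumes precomputed embeddings stored as lists of floats. The margin default `eps=0.2` is only an illustrative value.

```python
def sq_dist(u, v):
    """Squared L2 distance between two embedding vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, eps=0.2):
    """Zero once the negative is farther than the positive by at least eps."""
    return max(0.0, sq_dist(anchor, positive) - sq_dist(anchor, negative) + eps)
```

Triplets that already satisfy the margin give zero loss, so these "easy" triplets stop contributing gradients.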

BYOL

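It doesn't use negative examples! There are two networks with the same architecture, the online and the target network (the online one has an additional predictor head). We feed both the same image, but under two different data transformations.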

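The loss compares the online network's prediction $q_\theta$ for one view with the target network's projection $z'_\xi$ for the other view. Both vectors are first $\ell_2$-normalized ($\bar q_\theta = q_\theta / \lVert q_\theta\rVert_2$, $\bar z'_\xi = z'_\xi / \lVert z'_\xi\rVert_2$). The loss is then their squared distance, which is a rescaled cosine similarity:

$$\mathcal{L}_{\theta,\xi} = \lVert \bar q_\theta - \bar z'_\xi \rVert_2^2 = 2 - 2\,\frac{\langle q_\theta, z'_\xi\rangle}{\lVert q_\theta\rVert_2\,\lVert z'_\xi\rVert_2}$$

Only the weights $\theta$ of the online network are updated by gradient descent on this loss. The weights $\xi$ of the target network are a time average (exponential moving average) of the online weights:

$$\xi \leftarrow \tau\,\xi + (1 - \tau)\,\theta, \qquad \tau \in [0, 1]$$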
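The sketch below shows the two BYOL ingredients. It assumes the weights are flattened into plain lists and that the online prediction and target projection are already computed. In practice the loss is also symmetrized by swapping the two views. The `tau=0.99` default is illustrative.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def byol_loss(q_online, z_target):
    """Squared distance of the normalized vectors, equal to 2 - 2 * cosine similarity."""
    q, z = normalize(q_online), normalize(z_target)
    return sum((a - b) ** 2 for a, b in zip(q, z))


def ema_update(target_weights, online_weights, tau=0.99):
    """Target weights move toward the online weights (no gradient involved)."""
    return [tau * xi + (1.0 - tau) * theta
            for xi, theta in zip(target_weights, online_weights)]
```

The target network only changes through `ema_update`. With `tau` close to 1 it acts as a slowly moving average of past online networks.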

After training, only the online model is used, and the projection layer is discarded.

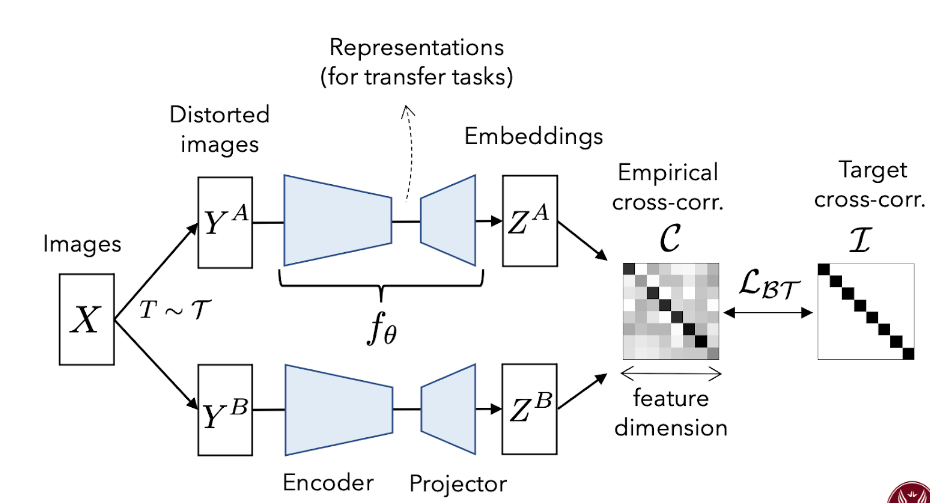

Barlow Twins

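Again two identical networks (the same network with shared weights), but we feed the same image under two different transformations, producing embeddings $z^A$ and $z^B$. The goal is to make the empirical cross-correlation matrix $\mathcal{C}$ between the two embeddings as close as possible to the identity:

$$\mathcal{L}_{BT} = \sum_i \big(1 - \mathcal{C}_{ii}\big)^2 + \lambda \sum_i \sum_{j \neq i} \mathcal{C}_{ij}^2$$

where

$$\mathcal{C}_{ij} = \frac{\sum_b z^A_{b,i}\, z^B_{b,j}}{\sqrt{\sum_b \big(z^A_{b,i}\big)^2}\,\sqrt{\sum_b \big(z^B_{b,j}\big)^2}}$$

Here $b$ indexes the samples in the batch and $i, j$ index the embedding dimensions (the embeddings are mean-centered along the batch). $\lambda > 0$ weights the off-diagonal (redundancy reduction) term against the diagonal (invariance) term.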
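Here is a plain-Python sketch of the Barlow Twins objective for a small batch. It assumes `z_a` and `z_b` are the two batches of embeddings given as lists of rows (one row per sample). It also assumes no embedding dimension is constant across the batch, since the normalization would otherwise divide by zero. The default `lam=5e-3` is illustrative.

```python
import math


def standardize_columns(z):
    """Mean-center each embedding dimension over the batch and scale it to unit L2 norm."""
    n, d = len(z), len(z[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [z[b][j] for b in range(n)]
        mean = sum(col) / n
        norm = math.sqrt(sum((x - mean) ** 2 for x in col))
        for b in range(n):
            out[b][j] = (col[b] - mean) / norm
    return out


def cross_correlation(z_a, z_b):
    """C[i][j] = sum over the batch of standardized z_a[:, i] * z_b[:, j]."""
    sa, sb = standardize_columns(z_a), standardize_columns(z_b)
    n, d = len(sa), len(sa[0])
    return [[sum(sa[k][i] * sb[k][j] for k in range(n)) for j in range(d)]
            for i in range(d)]


def barlow_twins_loss(z_a, z_b, lam=5e-3):
    """Push the diagonal of C toward 1 and the off-diagonal entries toward 0."""
    c = cross_correlation(z_a, z_b)
    d = len(c)
    on_diag = sum((1.0 - c[i][i]) ** 2 for i in range(d))
    off_diag = sum(c[i][j] ** 2 for i in range(d) for j in range(d) if i != j)
    return on_diag + lam * off_diag
```

Feeding the same batch twice gives a diagonal of ones, so only the off-diagonal (redundancy) term remains. That term is what discourages different embedding dimensions from encoding the same information.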